Overview

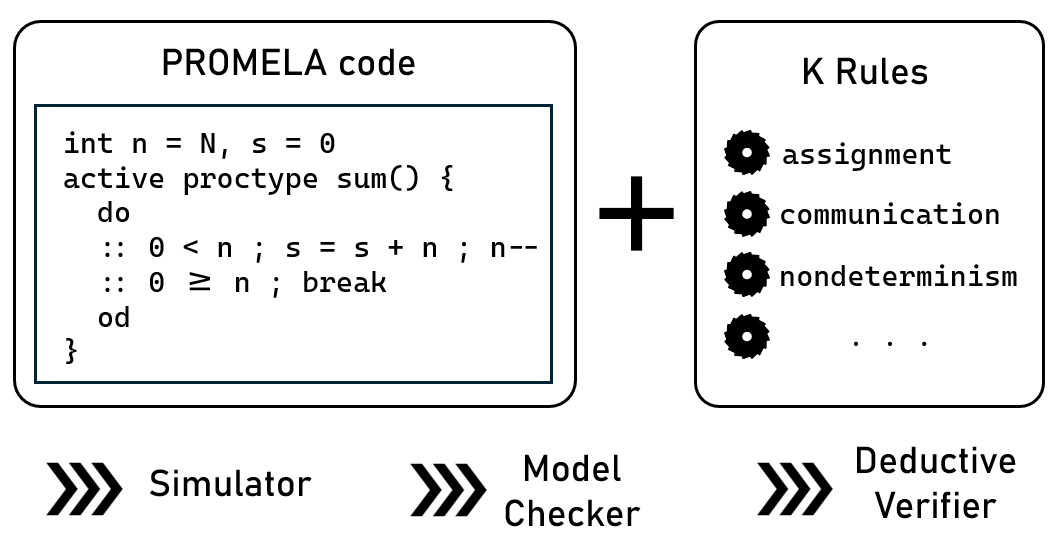

Logic and Algorithms for Software Verification.

Software is everywhere, but it can be buggy, unpredictable, and vulnerable to attacks. The goal of software verification is to ensure the reliability, security, and safety of software. In this research, we study automated algorithms for software verification, based model checking, computational logic, and satisfiability modulo theories (SMT).

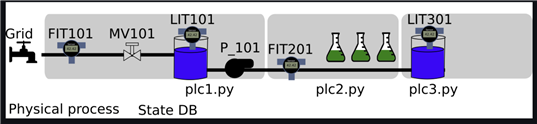

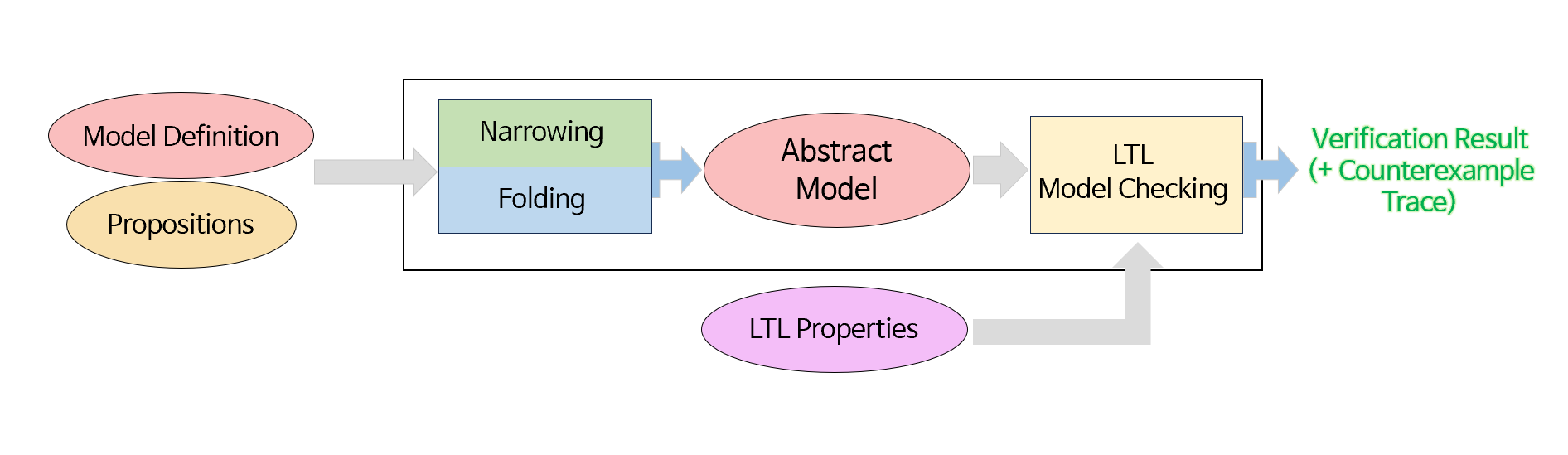

Modeling and Verification of Autonomous Cyber-Physical Agents.

Autonomous cyber-physical agent systems, such as self-driving cars and drones, must meet strong safety requirements. The goal of this study is to develop techniques to model and verify these systems before actual implementation, which can avoid possible accidents during prototype testing.

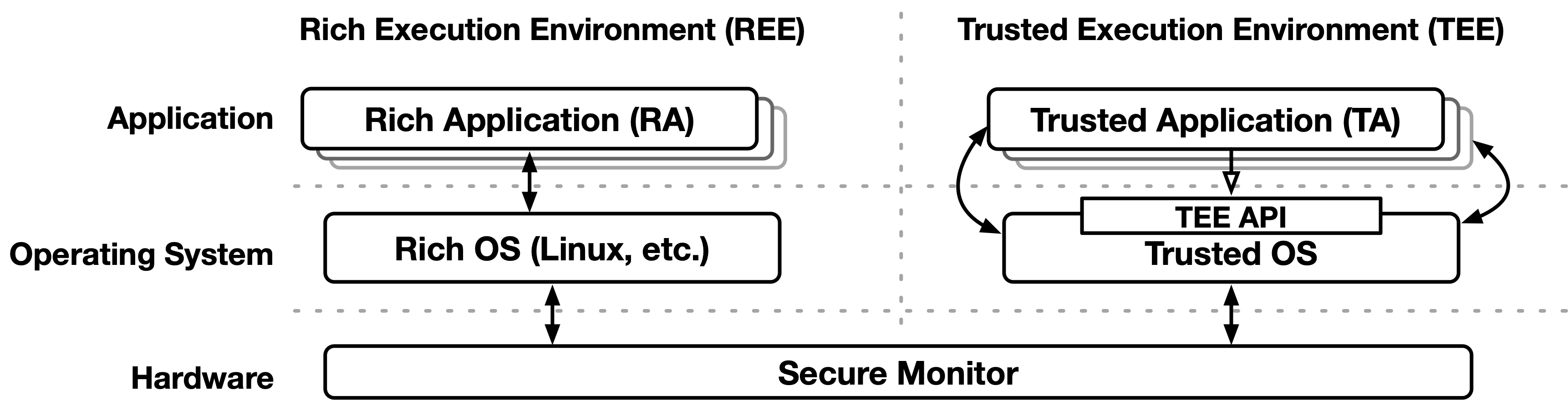

Automated Analysis of Safety-Critical AI Software.

AI-based technologies such as deep neural networks are being applied to safety-critical applications, e.g., self-driving cars and air traffic control systems. The subject of this research is to develop new techniques to prove the safety requirements of AI-based systems, to prevent accidents or security problems caused by software errors.