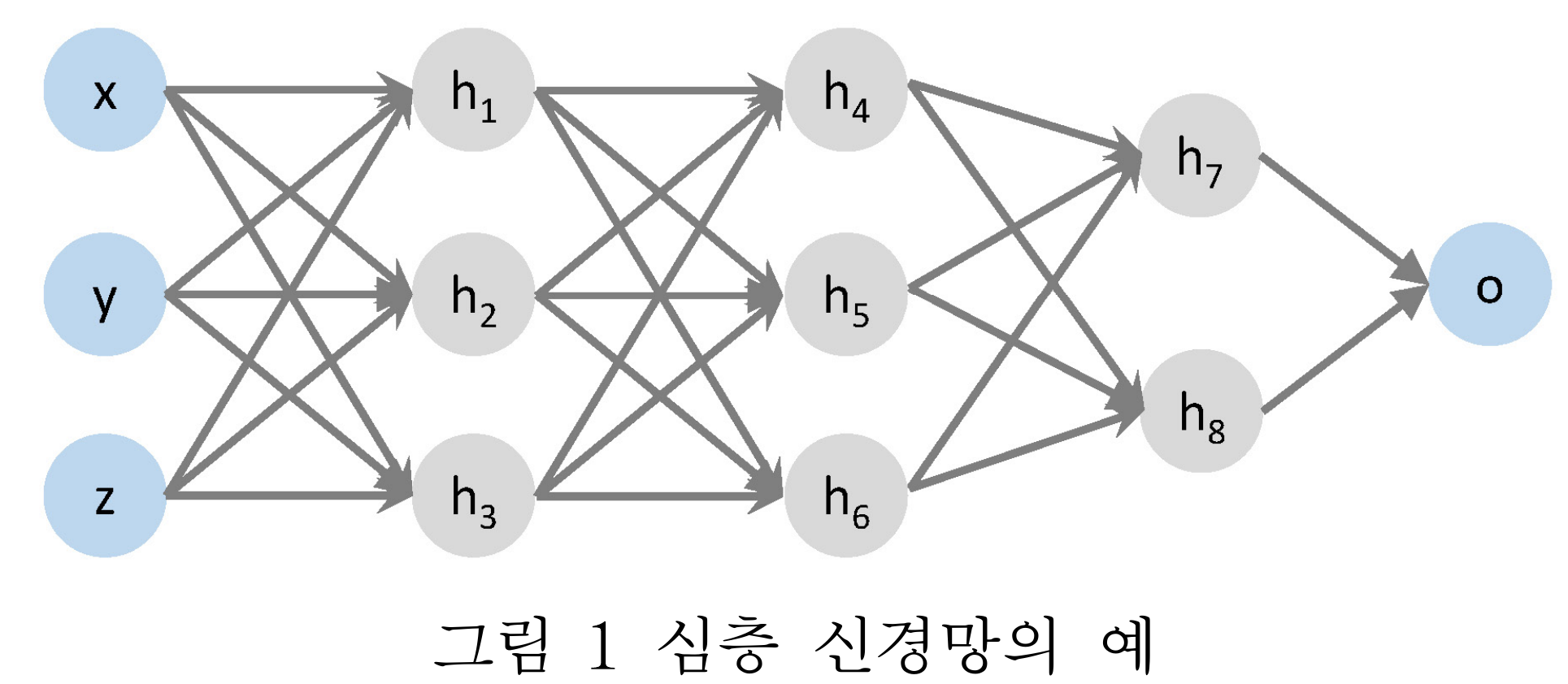

Layered Abstraction Technique for Effective Formal Verification of Deep Neural Networks

Abstract

Deep learning has performed well in many areas. However, deep learning is vulnerable to errors such as adversarial examples. Therefore, much research exists on ensuring the safety and robustness of deep neural networks. Since deep neural networks are large in scale and their activation functions are non-linear, linear approximation methods for such activation functions have been proposed and are widely used for verification. In this research, we propose a new technique, called layered abstraction, for non-linear activation functions such as ReLU and Tanh, and a verification algorithm based on it. We have implemented our method by extending existing SMT-based methods. The experimental evaluation showed that our tool performs better than an existing tool.

Main Problem

A major difficulty in neural network verification is the presence of activation functions such as ReLU (Rectified Linear Unit), Sigmoid, and Tanh, which give the DNN its non-linearity; this non-linearity makes the verification problem NP-hard. One solution is linear approximation (i.e., abstraction), which simplifies the verification problem and makes it more manageable for large-scale neural networks. Various abstraction techniques have been proposed, each with its own trade-off between precision and performance:

- Increasing performance shortens verification time but increases the likelihood of spurious counterexamples (false positives).

- Increasing precision yields fewer wrong answers but increases the computational cost.
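To make the notion of a spurious counterexample concrete, the sketch below uses the well-known "triangle" linear relaxation of ReLU over an input interval. This is a generic illustration, not necessarily one of the abstractions used in our tool; the function names `relu` and `in_triangle_relaxation` and the interval bounds `l` and `u` are assumptions introduced only for this example.

```python
def relu(x: float) -> float:
    """Exact ReLU activation."""
    return max(0.0, x)


def in_triangle_relaxation(x: float, y: float, l: float, u: float,
                           eps: float = 1e-9) -> bool:
    """Check whether (x, y) satisfies the linear triangle relaxation of
    y = ReLU(x) for x in [l, u], assuming l < 0 < u.

    The relaxation replaces the non-linear constraint with three linear ones:
        y >= 0,   y >= x,   y <= u * (x - l) / (u - l)
    """
    if not (l < 0.0 < u):
        raise ValueError("this sketch only covers the unstable case l < 0 < u")
    if not (l <= x <= u):
        return False
    upper = u * (x - l) / (u - l)
    return y >= -eps and y >= x - eps and y <= upper + eps


# The point (0, 0.5) is allowed by the relaxation on [-1, 1],
# although the real network would output ReLU(0) = 0 there.
# A solver working on the relaxation may report such a point as a
# counterexample; checking it against the exact ReLU reveals it is spurious.
x, y = 0.0, 0.5
allowed_by_abstraction = in_triangle_relaxation(x, y, -1.0, 1.0)
matches_real_relu = (relu(x) == y)
```

A tighter abstraction admits fewer such points but generally needs more constraints, which is the precision versus performance trade-off described above.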

Contributions

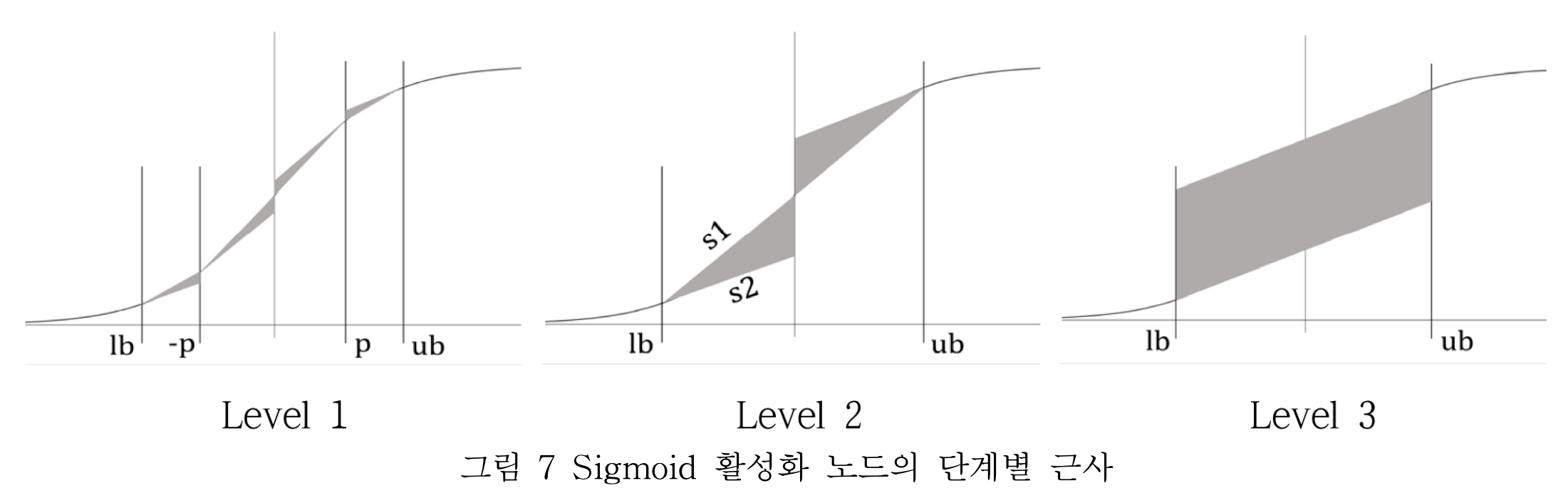

To mitigate the above trade-offs, we propose a new abstraction technique called the layered abstraction technique. This hierarchical technique provides tiers (i.e., levels) of abstraction, each with a different degree of precision and performance.

- Level 1: highest precision & lowest performance

- Level 2: mid precision & mid performance

- Level 3: lowest precision & highest performance

Then, during the verification process, we apply the highest-level abstraction first and gradually apply lower levels of abstraction each time a spurious counterexample is encountered.
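The refinement loop described above can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not our actual implementation: it switches the abstraction level for the whole network at once, and the callbacks `solve_abstract` (run the solver on the network abstracted at a given level) and `is_real_counterexample` (replay a candidate on the concrete network) are hypothetical placeholders.

```python
from typing import Any, Callable, Optional, Sequence, Tuple


def verify_layered(
    levels: Sequence[int],
    solve_abstract: Callable[[int], Optional[Any]],
    is_real_counterexample: Callable[[Any], bool],
) -> Tuple[str, Optional[Any]]:
    """Try abstraction levels from coarsest to most precise.

    `levels` is ordered from the highest (coarsest, fastest) level to the
    lowest (most precise, slowest) level, e.g. [3, 2, 1].
    `solve_abstract(level)` returns a candidate counterexample, or None if
    the property holds on the abstracted network.
    """
    for level in levels:
        candidate = solve_abstract(level)
        if candidate is None:
            # No counterexample even in the over-approximation:
            # the property holds for the concrete network as well.
            return ("verified", None)
        if is_real_counterexample(candidate):
            return ("violated", candidate)
        # Spurious counterexample: move on to a more precise level.
    # Assumption of this sketch: if every level only yields spurious
    # counterexamples, the result is reported as inconclusive.
    return ("unknown", None)


# Toy usage: Level 3 yields a spurious candidate, Level 2 proves the property.
tried = []

def toy_solver(level: int):
    tried.append(level)
    return {"x": 0.0} if level == 3 else None

result = verify_layered([3, 2, 1], toy_solver, lambda cex: False)
```

In this toy run the expensive Level 1 abstraction is never needed, which is the intended benefit of starting with the coarsest level.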

Experimental results

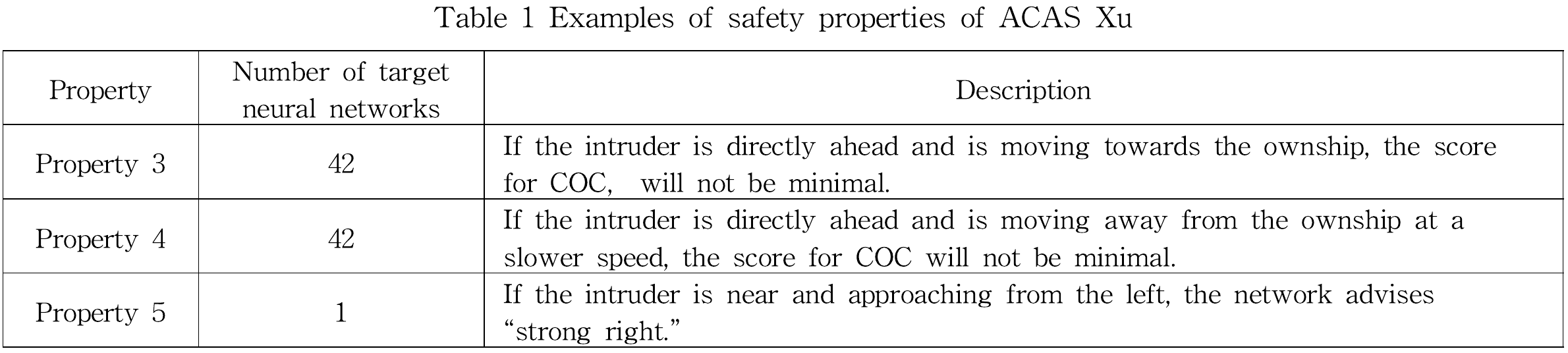

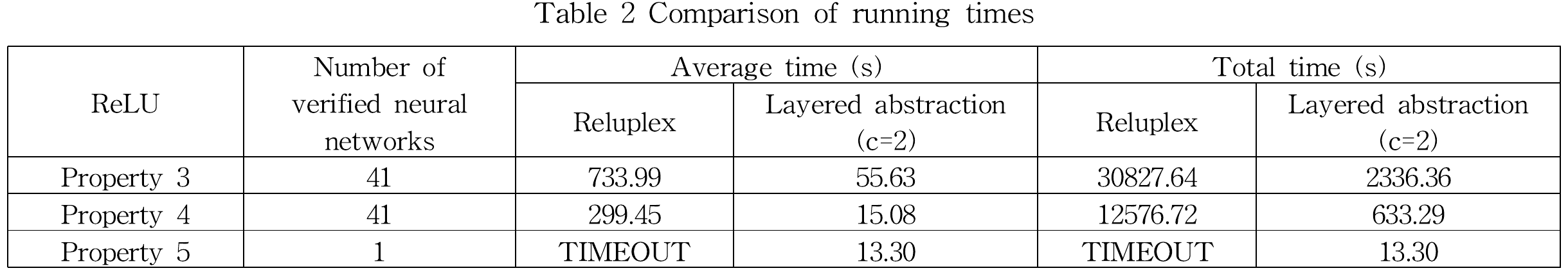

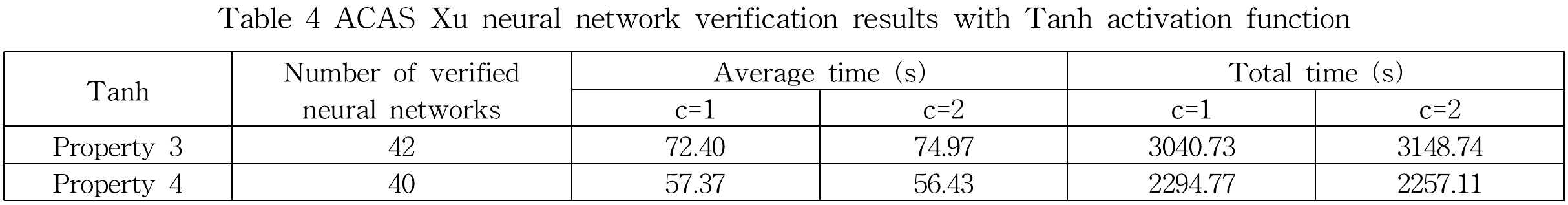

On this page, we show two experimental results that demonstrate the capabilities of our approach. First, we compare our approach against Reluplex in terms of verification time on the AcasXu neural networks. Second, we verify AcasXu neural networks with Tanh activation functions, which were previously unverifiable.

1. Properties Verified

2. Comparison against Reluplex

3. Verification of Tanh NN

For more details, refer to:

- J. Yeon, S. Chae, and K. Bae, Layered Abstraction Technique for Effective Formal Verification of Deep Neural Networks, Journal of KIISE, Vol. 49, No. 11, Nov 2022 [paper]

- J. Yeon, S. Chae, and K. Bae, Layered Abstraction for Formally Verifying Deep Neural Networks, Korea Software Congress (KSC), Dec 20-22, 2021 [paper]